Most A/B testing tools are built for marketing teams with budgets. If you’re a solo operator running WordPress, your options are a $50-200/month SaaS product, a JavaScript-based plugin that makes your page flicker, or not testing at all.

Most people pick option three.

I picked option four: build it myself. Server-side traffic splitting, conversion tracking, statistical confidence calculations, a dashboard with charts – all running inside WordPress with zero external dependencies. Built in a single session with Claude Code.

This isn’t a tutorial on how to build the same plugin. It’s about when building your own tools makes sense, what the process actually looks like with AI-assisted development, and why ownership matters more than features.

The problem with existing tools

A/B testing is conceptually simple. Split traffic between two versions of a page. Track which one converts better. Pick the winner. Done.

The tools, however, are anything but simple.

SaaS solutions like VWO, Optimizely, and Convert charge monthly fees that scale with traffic. That pricing model makes sense for enterprise teams running dozens of simultaneous tests across millions of pageviews. It makes zero sense for a solo founder testing two versions of a landing page that gets 500 visits a month.

Then there are the JavaScript-based solutions – free or cheap plugins that manipulate the page after it loads. The problem: your visitor sees the original page for a fraction of a second before the script swaps in the test variant. That flicker isn’t just ugly. It can affect test results, because the visitor’s first impression doesn’t match what they end up seeing.

And then there’s the dependency angle. Every external tool is a relationship you’re entering. Pricing changes. Features get removed. Products get acquired or shut down. Google Optimize – probably the most popular free option – was discontinued in 2023. Everyone who relied on it had to scramble.

For a simple landing page test, the cost-benefit math doesn’t work. You don’t need multivariate testing, audience segmentation, or a visual editor. You need to know if version A or version B converts better. That’s it.

What I built instead

A WordPress plugin that does exactly what’s needed and nothing more.

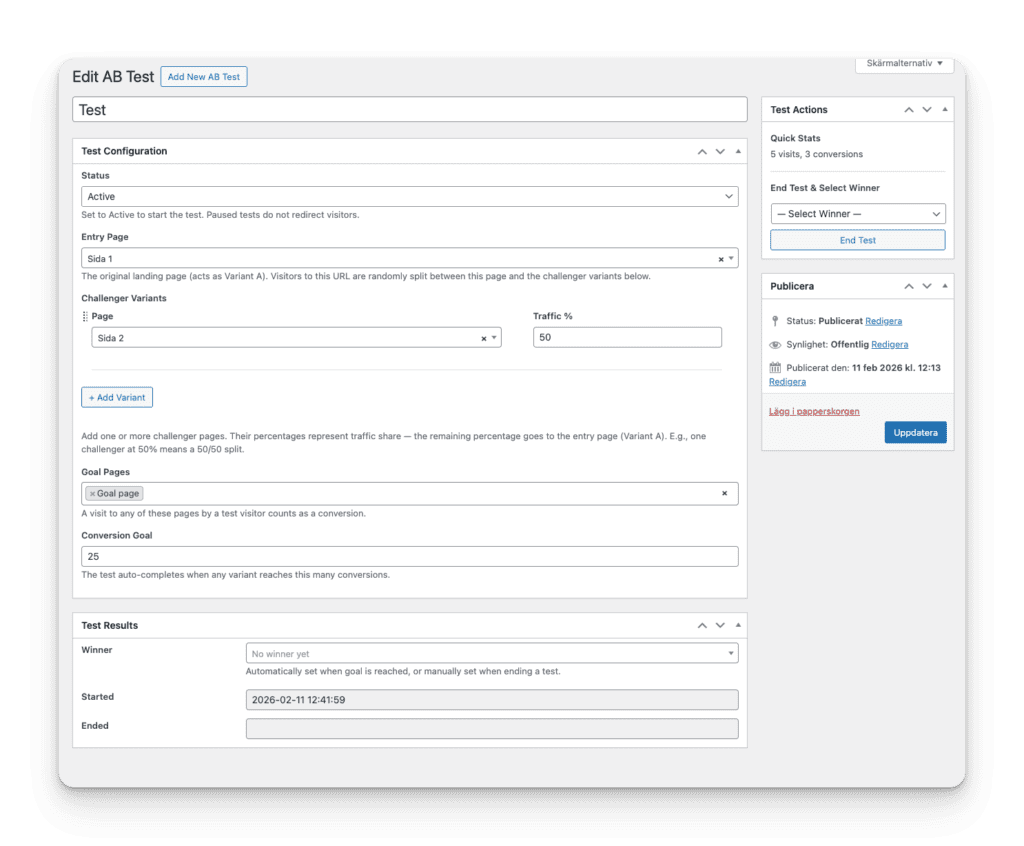

The core flow: select your current landing page as the entry page (it becomes Variant A automatically). Add one or more challenger pages with traffic percentages. Define what counts as a conversion – typically a thank-you page. Set a conversion goal: the number of conversions a variant must reach before the test ends. Activate. The plugin handles the rest.
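One way to picture the configuration this flow produces is as a small data model. This is a minimal Python sketch, not the plugin’s code (which runs in PHP). The class and field names are hypothetical, and the sketch assumes Variant A receives whatever share of traffic the challengers leave over, which the post doesn’t specify.

```python
from dataclasses import dataclass, field


@dataclass
class Challenger:
    page_id: int          # hypothetical: WordPress post ID of the challenger page
    traffic_percent: int  # share of traffic sent to this page


@dataclass
class ABTest:
    entry_page_id: int        # current landing page; always Variant A
    conversion_page_id: int   # what counts as a conversion, e.g. a thank-you page
    conversion_goal: int      # conversions a variant needs before the test ends
    challengers: list[Challenger] = field(default_factory=list)

    def weights(self) -> dict[str, int]:
        """Map variant labels to traffic percentages.

        Assumption: Variant A gets whatever the challengers leave over.
        """
        if not self.challengers:
            raise ValueError("add at least one challenger page")
        if self.conversion_goal <= 0:
            raise ValueError("conversion goal must be positive")
        claimed = sum(c.traffic_percent for c in self.challengers)
        if any(c.traffic_percent <= 0 for c in self.challengers) or claimed >= 100:
            raise ValueError("challenger percentages must be positive and total under 100")
        weights = {"A": 100 - claimed}
        for index, challenger in enumerate(self.challengers):
            # Label challengers B, C, D, ... in the order they were added.
            weights[chr(ord("B") + index)] = challenger.traffic_percent
        return weights


landing_test = ABTest(entry_page_id=10, conversion_page_id=42, conversion_goal=100,
                      challengers=[Challenger(11, 30), Challenger(12, 20)])
print(landing_test.weights())  # {'A': 50, 'B': 30, 'C': 20}
```

Validating at activation time keeps the request path simple: by the time a visitor arrives, the weights are known to be positive and to add up to 100.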

Traffic splitting happens server-side, at the earliest possible point in the WordPress loading sequence – before the theme, before other plugins, before anything renders. Visitors assigned to the original see their page load normally, with no redirect and no client-side script. Visitors assigned to a challenger get an immediate HTTP redirect before the original page is rendered. No JavaScript. No flicker. The only cost is one extra round trip, and only challenger visitors pay it.
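The split decision itself fits in a few lines. Below is a minimal Python sketch under stated assumptions: the plugin runs this logic in PHP early in the request, the function names are hypothetical, and the cookie is assumed to hold the variant label. The post doesn’t name the redirect status. A temporary 302 is the conventional choice for tests, since it tells search engines to keep the original URL indexed.

```python
import random
from typing import Optional


def assign_variant(weights: dict[str, int], rng: random.Random) -> str:
    """Pick a variant with probability proportional to its traffic percentage."""
    roll = rng.randrange(sum(weights.values()))  # integer in [0, total)
    for variant, weight in weights.items():
        if roll < weight:
            return variant
        roll -= weight
    raise RuntimeError("unreachable: the roll always lands in some bucket")


def route_request(cookie_variant: Optional[str], weights: dict[str, int],
                  challenger_urls: dict[str, str],
                  rng: random.Random) -> tuple[str, Optional[str]]:
    """Return (variant, redirect_url); redirect_url is None for Variant A."""
    # Returning visitors keep the variant already stored in their cookie.
    if cookie_variant in weights:
        variant = cookie_variant
    else:
        variant = assign_variant(weights, rng)
    if variant == "A":
        return variant, None  # original page renders normally, no redirect
    # Caller sets the cookie and sends a temporary redirect to this URL.
    return variant, challenger_urls[variant]
```

Walking cumulative buckets keeps each variant’s share proportional to its percentage in expectation and works unchanged for any number of challengers.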

Each visitor gets a cookie that locks them to their assigned variant for the duration of the test. A separate anonymous UUID cookie handles deduplication – same visitor, same hash, counted once. The hash uses SHA-256 with a server-side salt, so it’s deterministic but not reversible. No PII stored, ever.
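A sketch of the deduplication idea, assuming a random UUID per visitor and a secret salt kept on the server. Names are hypothetical, the counter lives in memory rather than in the database, and prefixing the salt before hashing mirrors the post’s description. A keyed HMAC-SHA256 is a common alternative construction.

```python
import hashlib
import uuid


def new_visitor_id() -> str:
    """Random, anonymous identifier stored in the visitor's cookie."""
    return str(uuid.uuid4())


def visitor_hash(visitor_id: str, salt: str) -> str:
    """Salted SHA-256: same ID and salt give the same hash; the salt never leaves the server."""
    return hashlib.sha256((salt + visitor_id).encode("utf-8")).hexdigest()


class VisitCounter:
    """Counts each visitor at most once per variant (in memory, for illustration)."""

    def __init__(self, salt: str) -> None:
        self.salt = salt
        self.seen: set[tuple[str, str]] = set()
        self.visits: dict[str, int] = {}

    def record(self, variant: str, visitor_id: str) -> bool:
        key = (variant, visitor_hash(visitor_id, self.salt))
        if key in self.seen:
            return False  # duplicate: this visitor was already counted
        self.seen.add(key)
        self.visits[variant] = self.visits.get(variant, 0) + 1
        return True


counter = VisitCounter(salt="replace-with-a-long-random-secret")
visitor = new_visitor_id()
counter.record("A", visitor)  # first visit is counted
counter.record("A", visitor)  # same hash, ignored
```

Checking that two fresh IDs produce different hashes is a cheap guard against a hash that collapses every visitor into one identifier.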

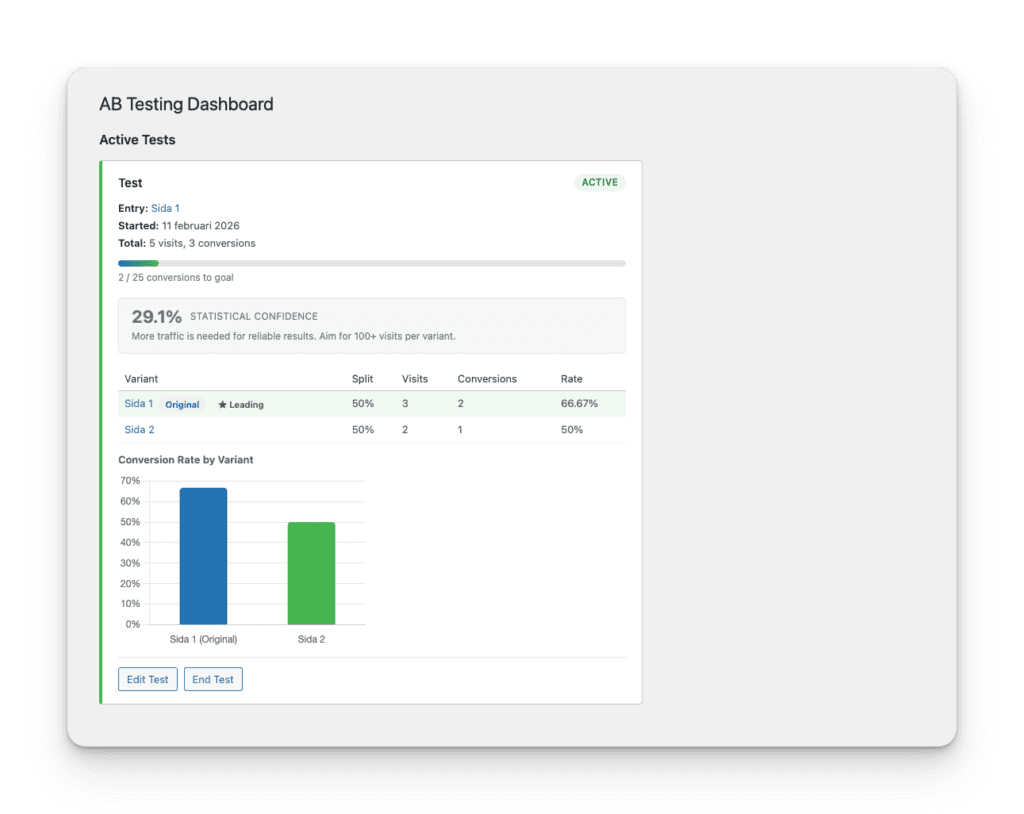

The dashboard shows everything that matters: visits and conversions per variant, conversion rates, a progress bar toward the goal, and a statistical confidence indicator using a two-proportion z-test.
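The two-proportion z-test compares the two conversion rates against a pooled rate that assumes both variants convert equally: `z = (p_B - p_A) / sqrt(p(1 - p)(1/n_A + 1/n_B))`, where `p` is the pooled rate. The Python sketch below assumes the dashboard’s “confidence” means 1 minus the two-sided p-value. The post doesn’t say which convention it uses, and the figures in the example are made up.

```python
import math


def two_proportion_z_test(visits_a: int, conversions_a: int,
                          visits_b: int, conversions_b: int) -> tuple[float, float]:
    """Compare B's conversion rate with A's.

    Returns (z, confidence), where confidence = 1 - two-sided p-value.
    """
    if visits_a == 0 or visits_b == 0:
        return 0.0, 0.0
    rate_a = conversions_a / visits_a
    rate_b = conversions_b / visits_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conversions_a + conversions_b) / (visits_a + visits_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / visits_a + 1 / visits_b))
    if std_error == 0:
        return 0.0, 0.0  # no conversions at all, or every visit converted
    z = (rate_b - rate_a) / std_error
    # Standard normal CDF of |z| via the error function.
    cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, 1 - p_value


z, confidence = two_proportion_z_test(500, 50, 500, 70)
print(f"z = {z:.2f}, confidence = {confidence:.1%}")  # z = 1.95, confidence = 94.8%
```

With 500 visits per variant and rates of 10% versus 14%, the result lands just short of the conventional 95% mark, which shows how much traffic a low-volume page needs before a difference is trustworthy.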

Bot filtering checks every request against 70+ known patterns before doing anything. Logged-in users are excluded automatically. When a variant hits the conversion goal, the test auto-completes and all traffic redirects to the winner.
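A sketch of the request gate and the auto-complete check. The pattern list is a hypothetical handful standing in for the plugin’s 70+ patterns. Matching them as case-insensitive substrings of the User-Agent header is an assumption, as is treating an empty User-Agent as a bot. Short tokens like “bot” are deliberately broad, which trades occasional false positives for fewer crawler visits in the counts.

```python
import re
from typing import Optional

# Hypothetical sample: illustrative stand-ins for the plugin's 70+ patterns.
BOT_PATTERNS = ["bot", "crawl", "spider", "slurp", "curl", "wget",
                "python-requests", "headless", "lighthouse"]
BOT_REGEX = re.compile("|".join(re.escape(p) for p in BOT_PATTERNS), re.IGNORECASE)


def should_split(user_agent: str, is_logged_in: bool) -> bool:
    """Decide whether a request takes part in the test at all."""
    # Assumption: an empty User-Agent is treated as a bot.
    if not user_agent or BOT_REGEX.search(user_agent):
        return False
    # Logged-in users (e.g. the site owner previewing pages) are excluded.
    if is_logged_in:
        return False
    return True


def find_winner(conversions: dict[str, int], goal: int) -> Optional[str]:
    """Return the variant that reached the goal (highest count if several), else None."""
    reached = {variant: count for variant, count in conversions.items() if count >= goal}
    if not reached:
        return None
    return max(reached, key=reached.get)
```

Once `find_winner` returns a label, that variant becomes the only destination for new traffic.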

It’s not fancy. It’s correct.

Why build instead of buy

The obvious argument for building your own tool is cost. No monthly fee, no per-pageview pricing, no surprise invoices. That’s real, but it’s not the interesting argument.

The interesting argument is ownership.

When you use a SaaS tool, you’re renting capability. The tool works until the company changes its pricing, deprecates a feature, gets acquired, or shuts down. Your data lives on someone else’s servers. Your workflow depends on someone else’s roadmap.

When you build your own tool, you own it. The data stays in your database. The tool does exactly what you need it to do. If you want to change something, you change it. There’s no support ticket, no feature request, no waiting for the next release cycle.

For a solo business, that matters more than most people realize. Every external dependency is a point of fragility. Not all dependencies are worth eliminating – you probably shouldn’t build your own email server. But for a bounded, well-defined problem like landing page A/B testing? The build-vs-buy math tilts heavily toward build.

Especially now.

AI changes the build-vs-buy equation

Two years ago, building a custom A/B testing plugin would have been a multi-week project. Even with solid WordPress development experience, the statistical calculations, the cookie handling, the bot filtering, the dashboard with charts – that’s a lot of code.

With Claude Code (Opus 4.6), it was a single intensive session.

Not because AI wrote perfect code on the first try. It didn’t. We hit bugs – PHP garbage collection silently destroying objects before their callbacks fired, a hash function that gave every visitor the same identifier, a Chart.js canvas that grew infinitely because of a CSS Grid interaction. Each bug required systematic debugging, hypothesis testing, and understanding why something was broken, not just that it was broken.

But the speed of iteration was dramatically different. Instead of spending hours writing boilerplate, I spent that time describing behavior and reviewing solutions. The ratio of thinking to typing shifted heavily toward thinking. And the thinking is the part that actually matters.

Here’s what’s important, though: domain knowledge isn’t optional. AI can write excellent code, but it doesn’t know what you’re trying to build or why. You need to know enough about the problem domain to ask the right questions, evaluate proposed solutions, and catch when something looks correct but isn’t. The Meta Box plugin stores multiple values as separate database rows instead of a serialized array – Claude didn’t know that. I did, because I’d worked with Meta Box before. That kind of specific, experience-based knowledge is where humans still make the biggest difference.
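The Meta Box detail matters because code that expects one serialized value will quietly read the wrong thing when each value is its own row. This Python simulation uses a hypothetical, simplified post-meta table and a made-up key. In WordPress itself the equivalent switch is the third `$single` argument of `get_post_meta()`: `true` returns a single value, `false` returns an array of every value stored under the key.

```python
from typing import NamedTuple, Optional


class MetaRow(NamedTuple):
    """One row of a post-meta-style table (hypothetical, simplified)."""
    post_id: int
    meta_key: str
    meta_value: str


def read_first(rows: list[MetaRow], post_id: int, key: str) -> Optional[str]:
    """Naive read that expects a single row holding every value (e.g. a serialized array)."""
    for row in rows:
        if row.post_id == post_id and row.meta_key == key:
            return row.meta_value
    return None


def read_all(rows: list[MetaRow], post_id: int, key: str) -> list[str]:
    """Correct read when each value lives in its own row."""
    return [row.meta_value for row in rows
            if row.post_id == post_id and row.meta_key == key]


rows = [MetaRow(10, "challenger_pages", "11"),
        MetaRow(10, "challenger_pages", "12"),
        MetaRow(10, "challenger_pages", "13")]
print(read_first(rows, 10, "challenger_pages"))  # 11 (two values silently dropped)
print(read_all(rows, 10, "challenger_pages"))    # ['11', '12', '13']
```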

The implication for solo builders is significant. If you have domain expertise and a well-defined problem, AI-assisted development lets you build tools that would have been impractical to build alone. Not because you couldn’t write the code – but because the time investment didn’t justify it. That calculation has changed.

When this makes sense (and when it doesn’t)

Building your own tools isn’t always the right call. Here’s how I think about it.

Build when: the problem is bounded and well-defined, you have domain knowledge, the tool is stable over time (doesn’t need constant updates for external API changes), and existing solutions are either too expensive, too complex, or too dependent on third parties.

Don’t build when: the problem requires ongoing maintenance against moving targets (like social media API integrations), you lack technical understanding of the domain, a free or cheap solution exists that’s genuinely good enough, or the time to build exceeds the value you’d get from having the tool.

A/B testing for WordPress landing pages hits every criterion in the “build” column. Bounded problem. Clear inputs and outputs. Stable over time: server-side redirects and first-party cookies are about as settled as web technology gets. And the existing solutions either cost too much or compromise on fundamentals like server-side splitting.

The key word is bounded. If you find yourself saying “and then I’d need to add…” more than twice during planning, the scope is probably too large. Build the smallest useful version. Ship it. Use it. Expand later if you actually need to.

The ownership principle

This isn’t really about A/B testing. It’s about a principle that applies across your entire digital infrastructure.

Your website should live on hosting you control, not a website builder that can change its terms. Your email list should be yours, not locked inside a platform that could raise prices or shut down. Your content should live on a domain you own, not exclusively on social media.

And your tools – when it’s practical – should be yours too.

Not everything. Not at any cost. But when the build is feasible and the problem is clear, ownership beats convenience every time. Because convenience has a hidden cost: dependency. And dependency compounds.

The tools for building your own solutions have never been more accessible. That doesn’t mean everyone should build everything. It means the threshold for “should I build this?” has dropped significantly. And for solo operators with domain expertise, that’s worth paying attention to.